What scientists really think about using AI in research

The scientific journal Nature surveyed more than 5,000 scientists worldwide about the ethics of using artificial intelligence in academic papers. The survey revealed sharp divisions, a Kazinform News Agency correspondent reports.

Respondents were asked to evaluate various scenarios involving the use of AI, including drafting a paper, generating an abstract, reviewing other researchers’ work, and translating text.

Where is the line of acceptability?

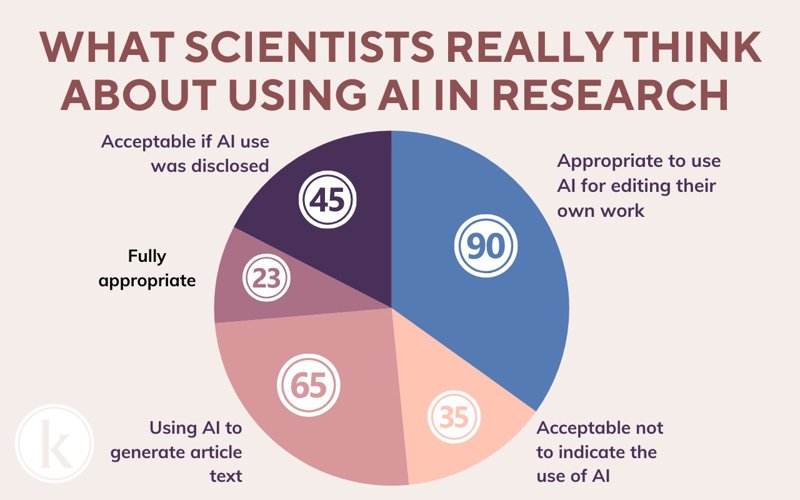

90% of respondents consider it appropriate to use AI to edit and translate their own work. However, opinions remain split on whether AI use should be disclosed: 35% believe it is acceptable not to disclose AI use or share the prompts.

65% of scientists approved of using AI to generate article text, while a third strongly opposed the practice. Acceptance was highest for generating the abstract: 23% of respondents considered it fully appropriate, and another 45% said it was acceptable if AI use was disclosed.

However, attitudes toward other sections were more cautious. A majority of participants said it was inappropriate to use AI to write the Methods (56%), Results (66%), and Discussion/Conclusion (61%) sections.

Over 60% of researchers deemed AI use in peer review unacceptable, especially for drafting initial reviews. Their key concerns center on confidentiality and diluted accountability.

Practice lags behind discussion

Despite widespread availability of AI tools, their actual use in scientific work remains limited:

· Only 28% of respondents have used AI for editing papers

· Just 8% have used it to write a first draft, summarize other articles for use in their own paper, translate a paper, or support peer review

· 65% haven't used AI for any scientific tasks

Younger scientists show greater openness to new technologies. Researchers from non-English-speaking countries are the most active AI users, primarily to overcome language barriers.

Ethics, responsibility, and quality

Many participants noted both advantages and disadvantages. On one hand, AI helps accelerate routine processes and synthesize information. On the other hand, its results are often inaccurate and may contain fabricated references and factual errors. Some complained that AI produces "well-formulated nonsense."

Nevertheless, some scientists advocate moderate AI use. For example, a humanities researcher from Spain said they use AI for translation but categorically refuse to use it for writing text or peer review.

Publisher policies

Publisher practices regarding AI remain heterogeneous. Most require disclosure of AI use if it was employed for text generation but allow its undisclosed use for proofreading and editing tasks.

Some journals, such as JAMA, require authors to specify the exact tool and describe how it was used. Others, such as IOP Publishing, have dropped mandatory disclosure, leaving only a recommendation to be transparent.

AI use in the peer review process is most commonly prohibited. For instance, Elsevier and the American Association for the Advancement of Science (AAAS) explicitly forbid reviewers from using generative AI. Meanwhile, Springer Nature and Wiley permit limited use with mandatory disclosure and prohibit uploading materials to online platforms or AI tools.

Earlier, Kazinform News Agency reported that AI chatbots could help combat loneliness by providing social interaction for people lacking real-life friends.